Voxel Cone Tracing for Real-time Global Illumination

By Toby Chen, Bob Cao, Gui Andrade. CS184/284A Spring 2019

Abstract

We present a real-time renderer written completely from scratch. This project combines several strategies for rendering a scene with realistic global illumination, including Voxel Cone Tracing, Ray Marching, Ground-Truth Ambient Occlusion, and other shading effects. We build upon RHI ("Render Hardware Interface"), a custom-designed game engine and hardware rendering framework. This modular system allows for highly compute-intensive graphics with minimal CPU overhead and maximum GPU utilization. Our codebase is also designed to be easily extensible, so that new render pipelines can be configured with small amounts of additional code.

Technical Summary and Implementation Pitfalls

Our codebase is built on the principle of separation of concerns. Our graphics state management framework, RHI, separates game logic from graphics logic. Within RHI, a domain-specific language, "pipelang", isolates pipeline management logic, and SPIR-V shaders isolate the post-processing logic.

Central to our desired photorealism were a couple of core algorithms. Additionally, our use of volumetric fog, atmospheric scattering, and physically-based rendering was integral to polishing our results.

Voxel Cone Tracing

Using RHI, we are able to render our desired scene into a 3-dimensional array of voxels (volume pixels) which describe the scene in coarse resolution. It is much less computationally intensive to ray-trace lighting in the scene with this decreased level of detail, to the point where a modern Nvidia GPU can render realistic lighting effects at ~30FPS without the use of any specialized raytracing hardware. See additional implementation details below.

One particular issue we faced when implementing VCT was the appearance of gaps among the voxels, which degraded the quality of our ray tracing. We solved this by rerasterizing the scene from the perspective of dominant normal vectors, rather than the camera view vector.

We also faced very low sampling resolution for fired rays, which we addressed with aggressive smoothing using both a spatial and a temporal anti-aliasing algorithm.

Ground Truth Ambient Occlusion

In order to provide more fine-grained lighting detail in areas with poor ray visibility, we use an ambient occlusion heuristic: Ground Truth Ambient Occlusion. This recently-published algorithm computes whether a pixel should be visible by looking at the pixel's "horizons" -- the visibility cone within a three dimensional hemispheric volume around the pixel. Roughly, GTAO weights together the pixel's normal direction with its visible horizon angles to determine whether it can reasonably be seen. See additional implementation details below.

Our initial implementation of GTAO saw significant issues based on a misunderstanding of the coordinate spaces of our various input data. We discovered that Vulkan points the texture coordinates' y axes in the opposite direction to what we expected, and that our initial implementation assumed our input maps to be in camera space when really they were in screen space.

Technical Detail

Global Illumination

Global Illumination is implemented using a voxel-based approach. The entire scene is voxelized on the GPU every frame, and a few different tracing techniques are used to gather radiance and other attributes from the voxel map and shade each on-screen fragment.

In a traditional ground-truth path tracer, we emit rays into the scene according to the projection, then check the intersections and gather illuminance. Without specialized hardware to handle ray-scene intersection, naive path tracing takes too much computational power because of the tremendous cost of ray-scene intersection. Although hardware-accelerated scene intersection is supported in RTX (a feature of the Nvidia Turing architecture), we decided not to use that system due to the lack of support on older or less powerful graphics cards.

The solution is to use voxel cone tracing. A path tracer essentially performs numerical integration using Monte Carlo sampling. This works on a continuous scene representation, since all primitives can be represented as well-defined continuous surfaces. If we discretize the scene (in other words, point-sample it) and turn it into voxels, the ray-scene intersection process can be sped up. Because indirect illumination is low-frequency in nature, a voxel representation of the scene still provides a close approximation.

Voxelization of the Scene

We take advantage of the GPU rasterizer to efficiently voxelize the scene into a 3D texture. Framed differently, voxelization is equivalent to rasterization with one extra dimension along the depth, which the rasterizer already provides as the $z$ value. To implement voxelization, an additional render pass feeds the scene geometry down the voxelization pipeline. A geometry shader projects each triangle along the axis that maximizes the number of fragments it covers, in order to minimize the number of voxels skipped due to a low sampling rate. An additional flag is passed into the fragment shader indicating the axis along which the fragment is projected. The fragment shader then unprojects the fragment back into world space to calculate its voxel position, and writes attributes such as color and normal into the corresponding voxel.
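
The two decisions described above, picking a projection axis and mapping a world-space point to a voxel, can be sketched on the CPU as follows. This is only an illustration in Python, not the project's shader code: the function names, the cubic grid, and the use of the triangle normal to pick the axis are assumptions made for the example.

```python
def dominant_axis(normal):
    """Return 0, 1 or 2 for the axis (x, y, z) with the largest |component|.

    Projecting a triangle along this axis maximizes its projected area,
    and therefore the number of fragments the rasterizer generates for it.
    """
    magnitudes = [abs(c) for c in normal]
    return magnitudes.index(max(magnitudes))


def world_to_voxel(position, grid_min, grid_size, resolution):
    """Map a world-space point to integer voxel coordinates.

    grid_min is the minimum corner of a cubic grid (hypothetical layout),
    grid_size its edge length, resolution the voxel count per axis.
    Returns None when the point lies outside the grid.
    """
    index = []
    for p, lo in zip(position, grid_min):
        t = (p - lo) / grid_size  # normalized coordinate in [0, 1)
        if not 0.0 <= t < 1.0:
            return None
        index.append(int(t * resolution))
    return tuple(index)


# A triangle facing mostly along +z is projected along the z axis.
print(dominant_axis((0.1, -0.3, 0.9)))
# A point inside an 8-unit grid centered at the origin, 128 voxels per axis.
print(world_to_voxel((0.5, -1.0, 3.9), (-4.0, -4.0, -4.0), 8.0, 128))
```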

Certain algorithms require the voxel texture to be prefiltered to reduce the number of samples needed. The hardware blitting feature exposed by RHI and the underlying APIs is used to down-sample the resulting voxel texture with a linear filter.
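
When each destination voxel covers exactly a 2x2x2 block of source voxels, a linear-filtered downsample is equivalent to averaging that block. The sketch below shows one such level on a nested Python list. It is a CPU illustration under that assumption (an even, cubic resolution and a single scalar channel), whereas the project relies on the hardware blit.

```python
def downsample(volume):
    """Halve the resolution of a cubic volume stored as volume[x][y][z].

    Each output voxel is the mean of the corresponding 2x2x2 input block.
    The input edge length is assumed to be even.
    """
    n = len(volume) // 2
    offsets = (0, 1)
    return [
        [
            [
                sum(
                    volume[2 * x + dx][2 * y + dy][2 * z + dz]
                    for dx in offsets
                    for dy in offsets
                    for dz in offsets
                ) / 8.0
                for z in range(n)
            ]
            for y in range(n)
        ]
        for x in range(n)
    ]


# A 2x2x2 volume with a single filled voxel becomes one voxel that is 1/8 full.
fine = [[[0.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
print(downsample(fine))
```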

Voxel Ray Marching

In the current implementation, we support energy-conserving PBR rendering of indirect lighting with only the Lambertian diffuse model. As the voxels have limited resolution, a naive ray marcher with a step size of roughly one voxel does a good job. It advances one step at a time and checks whether it has entered a new voxel. When it enters a new voxel, it checks for an intersection (whether a voxel is present at that location). The voxels also store normals, which lets us compute PBR indirect illumination.
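
A minimal CPU sketch of such a fixed-step marcher is shown below. The sparse dictionary standing in for the voxel texture, the function name, and the parameters are all assumptions made for illustration. The real implementation runs in a shader against a 3D texture.

```python
import math


def march(grid, origin, direction, step=1.0, max_steps=256):
    """Fixed-step ray march through a voxel grid with unit-sized voxels.

    grid is a dict mapping (i, j, k) to a payload (e.g. a normal) for each
    filled voxel; empty voxels are simply absent. Returns (voxel, payload)
    for the first filled voxel the ray enters, or None if nothing is hit.
    """
    length = math.sqrt(sum(c * c for c in direction))
    unit = [c / length for c in direction]
    position = list(origin)
    last_voxel = None
    for _ in range(max_steps):
        voxel = tuple(int(math.floor(p)) for p in position)
        if voxel != last_voxel:  # only test when a new voxel is entered
            last_voxel = voxel
            if voxel in grid:
                return voxel, grid[voxel]
        position = [p + step * c for p, c in zip(position, unit)]
    return None


# One filled voxel five cells along +x, storing a normal facing the ray.
scene = {(5, 0, 0): (-1.0, 0.0, 0.0)}
print(march(scene, (0.5, 0.5, 0.5), (1.0, 0.0, 0.0)))
```

A step of one voxel can skip a voxel that the ray only clips at a corner. The low-frequency nature of indirect lighting is what makes that trade-off acceptable here.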

Voxel Cone Tracing

Another way to gather radiance data from the voxel volume is voxel cone tracing. In this approach, we approximate the incoming radiance over a cone with just a single ray. Any arbitrary numerical precision can be achieved by adjusting the radius of the cone at unit distance. We start ray marching at some short offset away from the surface, and the voxel volume is sampled at every step along the ray. As the sample point marches away from the vertex of the cone, the base of the cone gets larger and larger, covering a wider volume of voxels. This concept connects to the mipmaps of the voxel volume quite naturally: sampling from a low resolution mipmap level covers more voxels than sampling from a high resolution one. Additionally, the alpha channel of the voxel volume is used to denote the occlusion factor, i.e., how "filled" a voxel is. As we ray march, we keep accumulating the radiance and the occlusion value using volumetric front-to-back accumulation: $c = \alpha c + (1 - \alpha)\alpha_2 c_2$ and $\alpha = \alpha + (1 - \alpha)\alpha_2$, where $c_2$ and $\alpha_2$ are sampled values from the voxel texture. We stop ray marching once either $\alpha$ reaches one or the ray leaves the voxel volume.
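
The loop structure of a single cone trace can be sketched as follows. This is an illustration under several assumptions, not the project's shader. The `sample` callback is a hypothetical stand-in for a mipmapped 3D texture lookup, radiance is a scalar, and the accumulated color is kept premultiplied by alpha (the common form of front-to-back compositing), so the color update is written as `color += (1 - alpha) * a2 * c2`.

```python
import math


def trace_cone(sample, origin, direction, half_angle, voxel_size, max_distance):
    """Accumulate radiance and occlusion along one cone.

    sample(position, mip_level) -> (radiance, alpha) is a hypothetical
    lookup into the prefiltered voxel volume. direction is assumed to be
    normalized. Returns (accumulated_radiance, accumulated_alpha).
    """
    color, alpha = 0.0, 0.0
    distance = voxel_size  # small start offset avoids self-intersection
    while distance < max_distance and alpha < 1.0:
        # The cone's footprint grows linearly with distance from its apex.
        diameter = max(voxel_size, 2.0 * math.tan(half_angle) * distance)
        # Each mip level doubles the voxel size, hence the log2.
        level = math.log2(diameter / voxel_size)
        position = tuple(o + distance * d for o, d in zip(origin, direction))
        c2, a2 = sample(position, level)
        # Front-to-back compositing with a premultiplied accumulator.
        color += (1.0 - alpha) * a2 * c2
        alpha += (1.0 - alpha) * a2
        distance += 0.5 * diameter  # step size scales with the footprint
    return color, alpha


# A uniform, half-transparent medium of unit radiance saturates quickly.
print(trace_cone(lambda p, level: (1.0, 0.5), (0.0, 0.0, 0.0),
                 (0.0, 0.0, 1.0), math.radians(30.0), 1.0, 64.0))
```

Stepping by a fraction of the current footprint keeps the number of samples roughly logarithmic in the traced distance, which is what makes wide cones cheap.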

Spatial Filtering & Temporal Accumulation

The raw output of the path tracer will be extremely noisy and unstable due to the very low sample rate for performance reasons.

The solution is to first introduce a temporal denoiser. The temporal denoiser reuses screen samples from the previous frame by following each pixel's velocity vector and applying screen-space reprojection. After temporal filtering, we add a layer of spatial filtering, which applies a depth-based bilateral Gaussian blur to the temporally filtered image.
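
The two filters can be illustrated with a small sketch. The blend factor, the sigma values, and the one-dimensional signal are all assumptions chosen for brevity. The actual filters run per pixel on 2D images and fetch the reprojected history through the velocity buffer.

```python
import math


def temporal_blend(history, current, blend=0.1):
    """Exponential moving average: keep most of the reprojected history."""
    return history + blend * (current - history)


def bilateral_blur_1d(values, depths, radius=2, sigma_s=1.5, sigma_d=0.1):
    """Gaussian blur whose weights also fall off with depth difference.

    Neighbors at a very different depth get near-zero weight, so the blur
    does not bleed lighting across geometric edges.
    """
    result = []
    for i in range(len(values)):
        total, weight_sum = 0.0, 0.0
        lo, hi = max(0, i - radius), min(len(values), i + radius + 1)
        for j in range(lo, hi):
            spatial = math.exp(-((i - j) ** 2) / (2.0 * sigma_s ** 2))
            depth = math.exp(-((depths[i] - depths[j]) ** 2) / (2.0 * sigma_d ** 2))
            total += spatial * depth * values[j]
            weight_sum += spatial * depth
        result.append(total / weight_sum)
    return result


# Two surfaces at depths 1 and 5: the lighting step between them is preserved.
print(bilateral_blur_1d([0.0, 0.0, 0.0, 1.0, 1.0, 1.0],
                        [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]))
print(temporal_blend(0.0, 1.0))
```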

To further improve the quality and stability of the image, we use a blue noise generator to provide the random samples and dithering used in the voxel tracer. This low-discrepancy noise distributes the samples more evenly and pushes the remaining error toward high frequencies, which helps the temporal and spatial denoisers produce a smoother image.

Ground Truth Ambient Occlusion

We started with an implementation of the simpler (but less accurate) Horizon Based Ambient Occlusion, then extended it to follow Activision's novel Ground Truth Ambient Occlusion algorithm.

For a brief description of how the algorithm calculates visibility of a pixel:

- The shader computes a random angle $\phi$ about the viewing axis. It searches pixels to the left and right, along a line parametrized by this angle.

- For each pixel $P_{found}(x, y, z)$, it computes the pixel's height $d$ relative to $P_{curr}(x, y, z)$, using a depth map of the whole scene.

- It computes the angles $h_1$ and $h_2$ which correspond to angles from the base of $P_{curr}$ to the top of the $P_{found}$ with the highest $d$. $h_1$ applies to the "left" direction and $h_2$ to the "right".

- It clamps the horizon angles to +/- 90 degrees from the pixel's normal vector $\vec n$. These normal vectors are provided by a normal map of the whole scene.

- It computes the angle $\gamma$ of $\vec n$ projected onto the sampling slice, and finally the visibility term $V = \frac{1}{4}(-\cos(2h_1 - \gamma) + \cos(\gamma) + 2h_1 \sin(\gamma)) + \frac{1}{4}(-\cos(2h_2 - \gamma) + \cos(\gamma) + 2h_2 \sin(\gamma))$.

- The shader's output occlusion term integrates multiple $V$ samples, with additional weights for projected $\vec n$ length and object thickness as heuristics to improve results.
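
The per-slice visibility term from the steps above can be evaluated directly. The sketch below assumes all angles are in radians and measured within the slice plane, with $h_1$ taken as the negative ("left") horizon and $h_2$ as the positive ("right") one. That sign convention and the function names are assumptions of this example, not a transcription of the project's shader.

```python
import math


def clamp_horizons(h1, h2, gamma):
    """Limit each horizon to at most 90 degrees away from the projected normal."""
    h1 = gamma + max(h1 - gamma, -math.pi / 2.0)
    h2 = gamma + min(h2 - gamma, math.pi / 2.0)
    return h1, h2


def arc(h, gamma):
    """One half of the visibility term, for a single horizon angle h."""
    return 0.25 * (-math.cos(2.0 * h - gamma) + math.cos(gamma)
                   + 2.0 * h * math.sin(gamma))


def slice_visibility(h1, h2, gamma):
    """Visibility V of one slice: 0 is fully occluded, 1 is fully open."""
    h1, h2 = clamp_horizons(h1, h2, gamma)
    return arc(h1, gamma) + arc(h2, gamma)


# Flat, unoccluded surface facing the viewer: both horizons at 90 degrees.
print(slice_visibility(-math.pi / 2.0, math.pi / 2.0, 0.0))
# Both horizons collapsed onto the view direction: nothing is visible.
print(slice_visibility(0.0, 0.0, 0.0))
```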

This ambient occlusion output is run through an additional 4x4 box blur pipeline stage, and finally a colorization stage where the visibility term is multiplied against albedos from the original scene render.

RHI

RHI stands for "Render Hardware Interface." It is our in-house solution to target multiple rendering APIs and platforms, and it currently supports both a Direct3D 11 and a Vulkan backend. Pure Vulkan requires the programmer to write 1000+ lines of code just for a simple "Hello Triangle" demo. While controlling fine-grained GPU functionality can often be useful, a graphics programmer would often much rather work with a succinct API than deal with GPU memory barriers and memory management when implementing a rendering algorithm. RHI makes GPU programming friendlier by abstracting away unnecessarily verbose details of each low-level rendering API, yet it still exposes a low-level view of the hardware context so as not to compromise on performance.

Below is a feature comparison between each API:

| Feature | RHI | Vulkan | D3D11 |
|---|---|---|---|
| Command Queue | Explicit | Explicit | None |
| Command Recording | Async command contexts that record into command lists | Directly write into VkCmdBuffer | Immediate mode |
| Pipeline States | Monolithic | Monolithic | Separate |
| Resource Binding | Descriptor Sets | Descriptor Sets | Individually bound |
| Memory Barriers | Not required | Required | Not required |
| Resource Management | Automatic (as in you can free even if in use by the GPU) | You risk hanging the GPU if you prematurely free something | Automatic |
| VRAM Allocation | Managed | Explicit | Managed |

The source code for RHI is available in a separate repository at: https://github.com/cyj0912/RHI

Pipelang

A DSL for effective graphics pipeline programming.

The problem of shader combination quickly arises as a render engine gets more and more complex. Shader componentization and modularization is a well researched area [Citation], yet a consensus on design has not been reached. We present our solution, Pipelang, which allows the developer to author shaders in logical stages. As a motivating example, consider two mesh formats (StaticMesh and SkinnedMesh), two materials (ConstantMaterial and TextureMaterial), and two render passes (GBufferPass and VoxelizePass). Intuitively, any combination that takes one component from each of the three categories should be a valid shader, and each component carries some parameters that can be set by the host application. Pipelang provides a Lua-based language that allows the developer to write exactly such components, and the Pipelang runtime assembles the stages into shaders and descriptor set layouts upon the host application's request. Shader caching and offline generation of shaders are also supported.
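
To make the size of the combination space concrete, the motivating example yields 2 x 2 x 2 = 8 shader variants. The snippet below only enumerates them. It is plain Python for illustration and does not reflect Pipelang's actual Lua syntax or runtime API.

```python
from itertools import product

# Component names taken from the motivating example above.
MESH_FORMATS = ["StaticMesh", "SkinnedMesh"]
MATERIALS = ["ConstantMaterial", "TextureMaterial"]
RENDER_PASSES = ["GBufferPass", "VoxelizePass"]


def shader_variants():
    """Return every (mesh format, material, render pass) combination."""
    return list(product(MESH_FORMATS, MATERIALS, RENDER_PASSES))


variants = shader_variants()
print(len(variants))
for mesh, material, render_pass in variants:
    print(f"{mesh} + {material} + {render_pass}")
```

Writing each variant by hand scales multiplicatively with every new component, which is the motivation for assembling shaders from independently authored stages.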

Event & Component system

In order to achieve further expandability and dynamic control of the game, the engine organizes its behaviour and its data using another tree structure called the "game graph". The game graph is composed of GameObject nodes, which provide the basic graph structure and reflection support.

There are two specific types of GameObject: Event and Behaviour. The game developer sets up the root events, which include the render event, logic update event, initialization event, etc. The core game logic, which controls the loading of the game scene and the movement of the camera, is composed in a behaviour called MainBehaviour. This behaviour uses the reflection system to register its handles into the root events, which then call the appropriate rendering or game logic code to make the game run.

By doing this, we achieved asynchronous game logic & input handling, as each event can be triggered in different threads. This abstracts the linear programming paradigm away from the core game loop and therefore achieves higher expandability and modularity.

Future work includes combining the game graph and scene graph, creating a more robust event system, adding work scheduling, and supporting atomic operations / locking of game state, all of which would move the engine toward a truly data-driven design. This would make better use of computational resources and make the developer's life easier.

Post-Processing

Because the global illumination system and lighting are all based on PBR, we need tonemapping and gamma correction to create a visually acceptable image: the brightness of the sunlight and the ambient light differ enormously in linear color space, which is where our integrator operates.

All textures are stored in sRGB with $\gamma = 2.2$, so we first convert them into linear space. After all lighting and post-processing, the HDR buffer is fed into a simple fitted ACES tonemap curve and then converted back into gamma space for display.
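
The color pipeline described above can be sketched per channel as follows. The constants in `aces_fitted` are those of the widely circulated ACES filmic fit by Krzysztof Narkowicz. Whether the project uses exactly this fit is an assumption, as is the pure power-law approximation of the sRGB transfer function.

```python
def srgb_to_linear(value, gamma=2.2):
    """Decode a stored texture value into linear space (power-law approximation)."""
    return value ** gamma


def aces_fitted(x):
    """Fitted ACES filmic curve (Narkowicz's approximation), clamped to [0, 1]."""
    a, b, c, d, e = 2.51, 0.03, 2.43, 0.59, 0.14
    mapped = (x * (a * x + b)) / (x * (c * x + d) + e)
    return min(max(mapped, 0.0), 1.0)


def linear_to_display(value, gamma=2.2):
    """Encode a tonemapped linear value back into gamma space for display."""
    return value ** (1.0 / gamma)


def present(hdr_value):
    """Tonemap one linear HDR channel value and encode it for the screen."""
    return linear_to_display(aces_fitted(hdr_value))


# Ambient-lit and sun-lit values differ by orders of magnitude before
# tonemapping, but both land inside the displayable range afterwards.
for radiance in (0.05, 1.0, 20.0):
    print(radiance, round(present(radiance), 4))
```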

In addition to tonemapping, we use the shadow map of the directional light source to create atmospheric scattering effects with a ray marcher. The ray marcher is dithered and uses only 16 samples. We also added an analytical skybox based on atmospheric scattering (Rayleigh and Mie scattering), which computes a real-time sky from the position of the main directional light (the sun). This gives us a natural-looking image with a realistic environment.

The very end of the rendering pipeline is a temporal anti aliasing system. The TAA uses reprojected samples from previous frames, and it decides its weight based on the local pixel velocity and depth changes to remove ghosting effect. We added a frame count based jitter in the vertex shader output for the mesh to achieve anti aliasing, and the TAA will also help to denoise the post-processing results.
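
One common way to derive a frame-count-based jitter is a Halton sequence. The sketch below assumes that choice, an 8-frame period, and offsets expressed in normalized device coordinates. None of these details are confirmed by the project, and the function names are hypothetical.

```python
def halton(index, base):
    """Return element `index` (1-based) of the Halton sequence in `base`."""
    result, fraction = 0.0, 1.0
    while index > 0:
        fraction /= base
        result += fraction * (index % base)
        index //= base
    return result


def jitter_offset(frame, width, height, period=8):
    """Sub-pixel offset in NDC units for a given frame number.

    NDC spans 2 units across the screen, so one pixel is 2 / width wide;
    the offset therefore stays within half a pixel in each direction.
    """
    i = frame % period + 1
    dx = (halton(i, 2) - 0.5) * 2.0 / width
    dy = (halton(i, 3) - 0.5) * 2.0 / height
    return dx, dy


# The offset would be added to the clip-space position (scaled by w)
# in the vertex shader before rasterization.
for frame in range(4):
    print(frame, jitter_offset(frame, 1920, 1080))
```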

With the TAA's pixel velocity data, we can easily achieve motion blur by sampling along the screen space pixel velocity vector, which improves the experience when dealing with low framerates.

Results

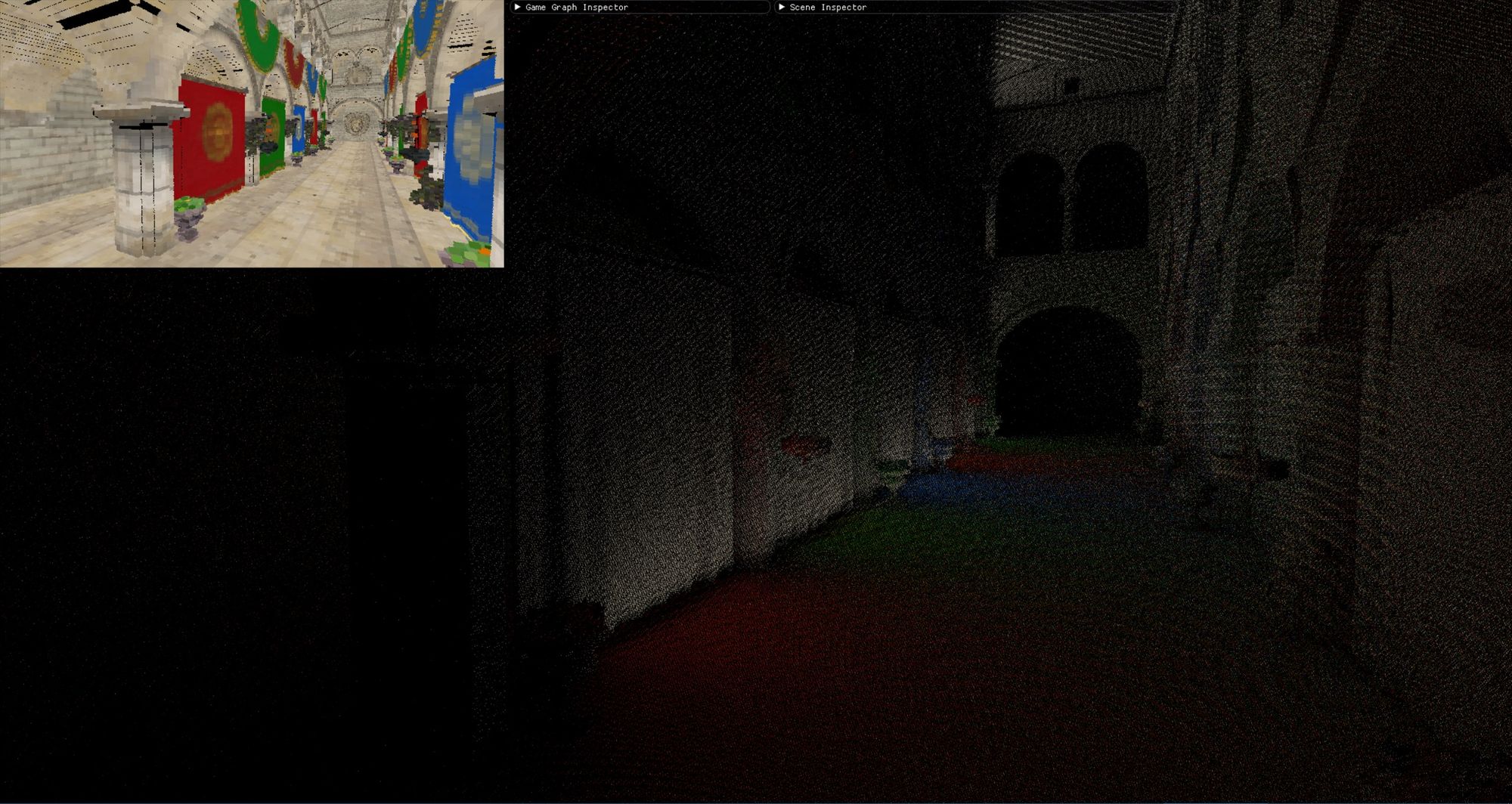

Demo video of global illumination achieved by voxel ray marcher:

References

- Jorge Jimenez, Xian-Chun Wu, Angelo Pesce, Adrian Jarabo. "Practical Realtime Strategies for Accurate Indirect Occlusion"

- Jorge Jimenez et al. "Practical Realtime Strategies for Accurate Indirect Occlusion (slides)". SIGGRAPH 2016.

- The Non-Orientable Manifold Editor project https://github.com/cyj0912/Nome3/ Provides RHI for this project

- VXGI https://developer.nvidia.com/vxgi Nvidia

- Image-Space Horizon-Based Ambient Occlusion. Louis Bavoil, et al. http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.214.6686&rep=rep1&type=pdf Nvidia

- The Basics of GPU Voxelization https://developer.nvidia.com/content/basics-gpu-voxelization Nvidia

- GPU Gems 3 Chapter 19. Deferred Shading in Tabula Rasa https://developer.nvidia.com/gpugems/GPUGems3/gpugems3_ch19.html Nvidia

- GPU Gems 3 Chapter 12. High-Quality Ambient Occlusion https://developer.nvidia.com/gpugems/GPUGems3/gpugems3_ch12.html Nvidia

- Introduction to Real-Time Ray Tracing with Vulkan https://devblogs.nvidia.com/vulkan-raytracing/ Nvidia

- Deferred Shading https://learnopengl.com/Advanced-Lighting/Deferred-Shading LearnOpenGL

- Imaged Based Lighting - Diffuse Irradiance https://learnopengl.com/PBR/IBL/Diffuse-irradiance LearnOpenGL

Contributors

Toby Chen:

- Architected and designed RHI.

- Implemented pipelang, a Lua-based DSL for specifying RHI pipeline components and their interactions.

- Parts of the Rendering Pipeline.

- Scene Graph and Model Loading.

Bob Cao:

- Implemented Voxel Cone Tracing pipeline.

- Incorporated a wide variety of visual effects shaders for more realistic rendering.

- Debugged and finished GTAO implementation and blurring.

- Implemented Entity/Component system with events and signaling.

Gui Andrade:

- Researched and provided initial GTAO implementation based on SIGGRAPH algorithm description.

- Implemented and debugged camera transforms and motion, and hooked user input.

- Implemented physically-based rendering for more realistic material lighting.

- Ported RHI and demo to Linux.